WEST LAFAYETTE — Deepfake videos of actor Tom Cruise on TikTok might confuse some fans. A deepfake video of a drug company CEO announcing COVID-19 vaccine failures, however, could cause panic.

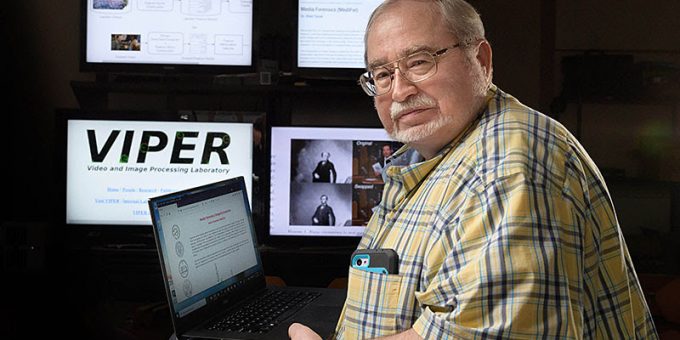

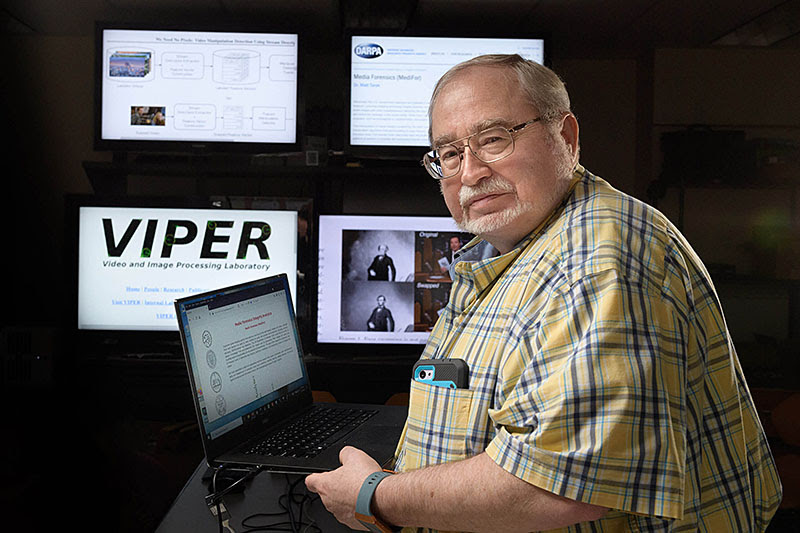

Ferreting out deepfake videos is the work of Edward Delp, the Charles William Harrison Distinguished Professor of Electrical and Computer Engineering at Purdue University, who is leading one of the teams in the Semantic Forensics program created by the Defense Advanced Research Projects Agency for the U.S. Department of Defense.

The program, which has been underway since late last year, continues Delp’s previous work on tools to detect deepfakes and manipulated media. The research now extends much further, covering other video styles and other media such as images and documents.

“Not only do we want to be able to detect when a piece of media has been manipulated, but we want to be able to attribute it: Who did it, why did they do it and what was their intent?” said Delp, director of the Video and Imaging Processing Laboratory at Purdue. “It’s called detection, attribution and characterization. We’re trying to solve the whole problem.”

Delp has worked on video tampering as part of broader research into media forensics, focusing on sophisticated techniques based on artificial intelligence and machine learning to create an algorithm that detects deepfakes.

For this program, Delp is overseeing a team of technical experts from the University of Notre Dame, University of Campinas in Brazil, and three Italian universities: Politecnico di Milano, the University of Siena, and the University Federico II of Naples.

The team has complementary skills and backgrounds in computer vision, biometrics, machine learning, digital forensics, signal processing and information theory.

The team is moving away from studying deepfakes that concentrate only on faces, instead looking at fake street scenes and fake biological imagery like microscope images and X-rays.

“Think about the insurance fraud if you have a doctor who could generate fake X-rays of patients and start turning those in to the insurance companies,” Delp said.

Something as seemingly harmless as a driver mounting a camera in a car to record the road ahead can have other applications. Delp said the video can be manipulated to make it look like a different road or neighborhood and then used to try to fool an autonomous vehicle system.

Delp said the team is doing some unique things with document validity, particularly with regard to research papers.

“One of the things we’re looking at is, with the COVID pandemic there has been a lot of scientific papers put forward that have falsified data,” he said. “We are building a tool that will look at a scientific paper; in particular look at the images and figures and determine whether they’ve been manipulated.”

Delp said the problem, called research integrity, has been an ongoing issue for almost 15 years, particularly in the biomedical community.

The team is also examining articles that are falsely passed off as originating from specific newspapers. Delp said the work is called style detection and examines the writing style guides of a number of national newspapers.

Delp said the premise is that someone who writes an article and then tries to pass it off as coming from the New York Times probably did not read the style guide and, as a result, will not use the appropriate wording.

Style detection is one of the team’s long-term projects.